Deep Learning Theory - Optimization

(Was part of an MSRA project at OptiML Lab.)

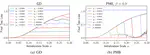

Gradient Descent with Polyak’s Momentum Finds Flatter Minima via Large Catapults

- Updated version accepted to the 2nd Workshop on High-dimensional Learning Dynamics (HiLD): The Emergence of Structure and Reasoning, at ICML 2024.

- Preliminary version (under a different title, “Large Catapults in Momentum Gradient Descent with Warmup: An Empirical Study”) accepted to the NeurIPS 2023 Workshop on Mathematics of Modern Machine Learning (M3L) as an oral presentation.

- Joint work with Prin Phunyaphibarn (KAIST Math, intern, equal contributions), Chulhee Yun (KAIST AI), Bohan Wang (USTC & MSRA), and Huishuai Zhang (MSRA).

A Statistical Analysis of Stochastic Gradient Noises for GNNs

- Preliminary work accepted to KCC 2022.

- Joint work with Minchan Jeong, Namgyu Ho, and Se-Young Yun (KAIST AI).