A Jointly Efficient and Optimal Algorithm for Heteroskedastic Generalized Linear Bandits with Adversarial Corruptions

Abstract

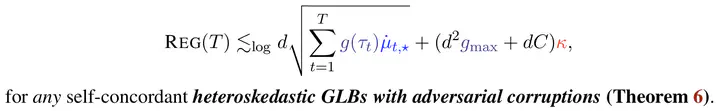

We consider the problem of heteroskedastic generalized linear bandits (GLBs) with adversarial corruptions, which subsumes various stochastic contextual bandit settings, including heteroskedastic linear bandits and logistic/Poisson bandits. We propose HCW-GLB-OMD, which consists of two components: an online mirror descent (OMD)-based estimator and Hessian-based confidence weights to achieve corruption robustness. This is computationally efficient in that it only requires $O(1)$ space and time complexity per iteration. Under the self-concordance assumption on the link function, we show a regret bound of $\tilde{O}\left( d \sqrt{\sum_t g(\tau_t) \mu_{t,\star}} + d^2 g_{\max} \kappa + d \kappa C \right)$, where $\mu_{t,\star}$ is the slope of $\mu$ around the optimal arm at time $t$, $g(\tau_t)$’s are potentially exogenously time-varying dispersions (e.g., $g(\tau_t) = \sigma_t^2$ for heteroskedastic linear bandits, $g(\tau_t) = 1$ for Bernoulli and Poisson), $g_{\max} = \max_{t \in [T]} g(\tau_t)$ is the maximum dispersion, and $C \geq 0$ is the total corruption budget of the adversary. We complement this with a lower bound of $\tilde{\Omega}(d \sqrt{\sum_t g(\tau_t) \mu_{t,\star}} + d C)$, unifying previous problem-specific lower bounds. Thus, our algorithm achieves, up to a $\kappa$-factor in the corruption term, instance-wise minimax optimality simultaneously across various instances of heteroskedastic GLBs with adversarial corruptions.