Abstract

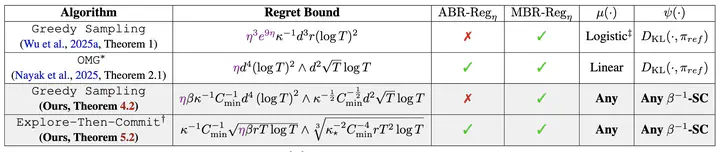

We consider the problem of contextual online RLHF with general preferences, where the goal is to identify the Nash Equilibrium. We adopt the Generalized Bilinear Preference Model (GBPM) to capture potentially intransitive preferences via low-rank, skew-symmetric matrices. We investigate general preference learning with \textbf{\emph{any}} strongly convex regularizer (where $\eta^{-1}$ is the regularization strength), generalizing beyond prior works limited to reverse KL regularization. Central to our analysis is a proof that the dual gap of the greedy policy is bounded by the \textit{square} of the estimation error, a result derived solely from strong convexity and the skew-symmetric nature of GBPM. Building on this insight and a feature diversity assumption, we establish two regret bounds: (1) A simple \texttt{Greedy Sampling} strategy achieves polylogarithmic regret $\tilde{\gO}(\eta d^4 (\log T)^2)$. Crucially, this avoids the exponential dependence on $\eta$ (i.e., $e^{\gO(\eta)}$), partially resolving an open problem posed by Wu et al. (2025). (2) \texttt{Explore-Then-Commit} achieves $\poly(d)$-free regret $\tilde{\gO}(\sqrt{\eta T})$ by exploiting the low-rank structure; this is the first statistically efficient guarantee for online RLHF in the high-dimensional regime.