Gradient Descent with Polyak's Momentum Finds Flatter Minima via Large Catapults

Abstract

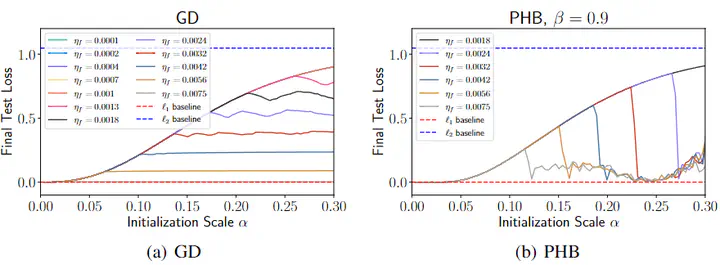

Although gradient descent with Polyak's momentum is widely used in modern machine learning and deep learning, a concrete understanding of its effects on the training trajectory remains elusive. In this work, we empirically show that for linear diagonal networks and nonlinear neural networks, momentum gradient descent with a large learning rate displays large catapults, driving the iterates towards much flatter minima than those found by gradient descent. We hypothesize that the large catapult is caused by momentum "prolonging" the self-stabilization effect (Damian et al., 2023). We support this hypothesis theoretically and empirically in a simple toy example, and empirically for linear diagonal networks.
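For readers unfamiliar with the update rule, the following is a minimal sketch of gradient descent with Polyak's (heavy-ball) momentum, x_{t+1} = x_t - lr * grad(x_t) + beta * (x_t - x_{t-1}). It is not the experimental setup of this work: the function name `heavy_ball`, the quadratic objective, and the values of `lr` and `beta` are all assumptions chosen for illustration. Setting `beta=0` recovers plain gradient descent.

```python
import numpy as np

def heavy_ball(grad, x0, lr, beta, steps):
    """Polyak's momentum: x_{t+1} = x_t - lr * grad(x_t) + beta * (x_t - x_{t-1})."""
    x_prev = np.asarray(x0, dtype=float)
    x = x_prev.copy()  # x_{-1} = x_0, so the first step is a plain gradient step
    trajectory = [x.copy()]
    for _ in range(steps):
        x_next = x - lr * grad(x) + beta * (x - x_prev)
        x_prev, x = x, x_next
        trajectory.append(x.copy())
    return np.array(trajectory)

# Hypothetical toy objective f(x) = 0.5 * x^T A x (an assumption, not from the paper).
A = np.diag([1.0, 10.0])
grad = lambda x: A @ x

gd = heavy_ball(grad, [1.0, 1.0], lr=0.1, beta=0.0, steps=200)  # plain gradient descent
hb = heavy_ball(grad, [1.0, 1.0], lr=0.1, beta=0.9, steps=200)  # with momentum
```

On a quadratic with curvature λ, plain gradient descent is stable only for lr < 2/λ, whereas the heavy-ball iteration is stable for lr < 2(1 + β)/λ, so the same learning rate interacts differently with sharpness once momentum is added.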

Previous title: Large Catapults in Momentum Gradient Descent with Warmup: An Empirical Study