Abstract

The success of reinforcement learning (RL) is often attributed to the seeming phenomenon that deep RL can effectively utilize the underlying low-dimensional representation of the given environment for learning. Theoretically, this is formalized by imposing certain structural assumptions on the MDP. Here, we focus on block MDP (BMDP), in which the contexts are clustered, and latent state transition dynamics govern the observable transitions. Recently, Jedra et al. [6] provided near-optimal guarantees, independent of the usual function approximation framework, for the clustering in BMDP by proving a lower bound for the error rate and proposing a two-step algorithm whose performance guarantee nearly matches the lower bound. This paper empirically validates their theoretical results by implementing and simulating the proposed algorithm in a synthetic BMDP environment. Moreover, we also empirically validate the algorithm’s robustness to random corruption of the trajectories to some level.

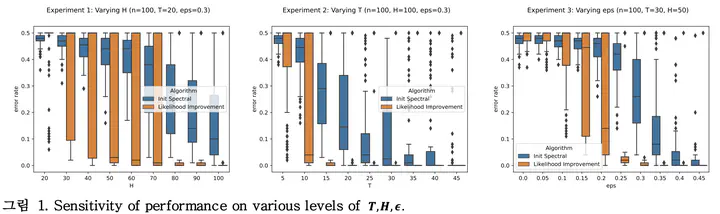

(To be filled out)