Large Catapults in Momentum Gradient Descent with Warmup: An Empirical Study

Abstract

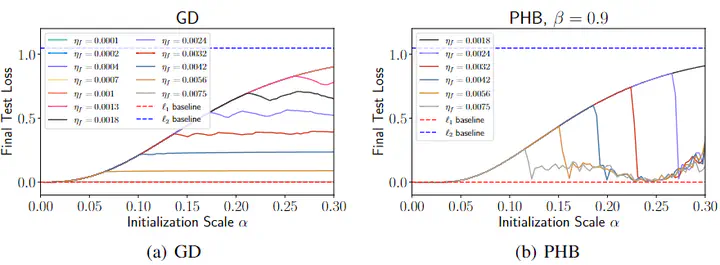

Although gradient descent with momentum is widely used in modern deep learning, a concrete understanding of its effects on the training trajectory remains elusive. In this work, we empirically show that momentum gradient descent with a large learning rate and learning rate warmup displays large catapults, driving the iterates towards flatter minima than those found by gradient descent. We then provide empirical evidence and theoretical intuition that the large catapult is caused by momentum "amplifying" the self-stabilization effect (Damian et al., 2023).
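For readers unfamiliar with the setup, the following is a minimal sketch of momentum gradient descent with learning rate warmup. It assumes the heavy-ball (Polyak) form of momentum and a linear warmup schedule; the abstract does not specify either, so the function names, the schedule, and the hyperparameters below are illustrative assumptions rather than the paper's configuration. The toy loss is a one-dimensional quadratic, whose curvature is constant, so this sketch only illustrates the update rule and does not reproduce a catapult.

```python
def warmup_lr(step, peak_lr, warmup_steps):
    """Linear warmup to peak_lr over warmup_steps (assumed schedule)."""
    return peak_lr * min(1.0, (step + 1) / warmup_steps)


def momentum_gd(grad_fn, x0, peak_lr, beta, warmup_steps, num_steps):
    """Heavy-ball momentum GD (assumed form): v <- beta * v - lr * g, x <- x + v."""
    x, v = x0, 0.0
    trajectory = [x]
    for t in range(num_steps):
        lr = warmup_lr(t, peak_lr, warmup_steps)
        v = beta * v - lr * grad_fn(x)  # accumulate velocity
        x = x + v                       # move along the velocity
        trajectory.append(x)
    return trajectory


# Toy loss 0.5 * a * x**2 with gradient a * x (hypothetical example problem).
a = 1.0
traj = momentum_gd(lambda x: a * x, x0=1.0, peak_lr=0.1, beta=0.9,
                   warmup_steps=10, num_steps=200)
```

Setting `beta=0.0` in this sketch recovers plain gradient descent with the same warmup schedule, which is the baseline the abstract compares against.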

Publication

In The NeurIPS 2023 Workshop on Mathematics of Modern Machine Learning (M3L) - Oral Presentation
